“AI is the goal; AI is the planet we’re headed to. Machine learning is the rocket that is going to get us there. And Big Data is the fuel.” – Pedro Domingos

General Introduction

The Internet of Things (IoT) and Artificial Intelligence (AI) have provided long-awaited help in the way we operate our machines. Together they form a unique combination that has produced solutions to a wide range of problems. In the workplace, these systems can reduce costs by helping employees work more efficiently and effectively. In some cases they can replace people altogether when large amounts of data need to be evaluated, since they leave little room for error. Such systems can handle enormous amounts of data and can support activities such as decision-making, physical labour, and the analysis of large blocks of data. Smart devices communicate with one another and can integrate data from different sources. However, that data must first be harmonized and standardized so that readings from different sources can be compared when the need arises. From an analytical point of view, this is the first step in using the Internet of Things to handle real-time data of immense variety.
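To make “harmonizing” data more concrete, here is a minimal Python sketch. It assumes two hypothetical sensors that report temperature in different units under different field names (`temp_c` and `tempF` are invented for illustration). Each raw reading is mapped onto one common schema so that the values can be compared directly.

```python
# Hypothetical readings from two different IoT sensors: one reports Celsius
# under "temp_c", the other Fahrenheit under "tempF" (field names are assumptions).
raw_readings = [
    {"device": "sensor-a", "temp_c": 21.5},
    {"device": "sensor-b", "tempF": 70.7},
]


def harmonize(reading: dict) -> dict:
    """Map a raw reading onto one common schema: {'device', 'temperature_c'}."""
    if "temp_c" in reading:
        celsius = reading["temp_c"]
    elif "tempF" in reading:
        # Convert Fahrenheit to Celsius so both sources use the same unit.
        celsius = (reading["tempF"] - 32) * 5 / 9
    else:
        raise ValueError(f"unknown reading format: {reading}")
    return {"device": reading["device"], "temperature_c": round(celsius, 1)}


harmonized = [harmonize(r) for r in raw_readings]
print(harmonized)
```

After harmonization both hypothetical devices report 21.5 °C, which is not obvious from the raw records. A real pipeline would also need to align timestamps and handle missing fields.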

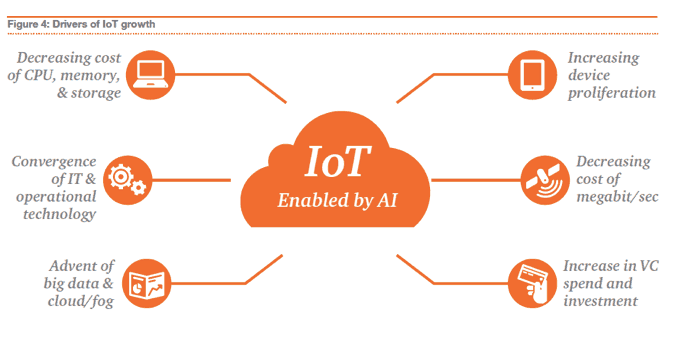

Over the last few decades, the field of IoT has grown enormously. Widely cited projections estimated that more than 50 billion devices would be connected by 2020, which would amount to roughly six to seven devices for every person on the planet. Many IoT applications rely on Artificial Intelligence (AI) and Machine Learning (ML). The concepts of AI and ML are not new, but they remain under active research and experimentation. In the context of IoT, a device is called Intelligent if it can SENSE > THINK > ACT. ‘Sense’ means collecting data from its surroundings, ‘Think’ means processing that data repeatedly to find common patterns, and ‘Act’ means responding to the situation in its environment without any human intervention. In other words, intelligence can be regarded as “the ability to use intellectual capabilities that is marked by the use of logic to solve problems”.
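The SENSE > THINK > ACT cycle can be illustrated with a toy Python class. This is a sketch under assumptions: the `SmartFan` device, its 26 °C threshold and its three-reading window are all hypothetical. “Think” is modelled here as a moving average over recent readings, so that a single noisy spike does not trigger an action.

```python
from collections import deque
from statistics import mean


class SmartFan:
    """Toy 'intelligent' device following the SENSE > THINK > ACT cycle.

    The threshold and window size are illustrative assumptions.
    """

    def __init__(self, threshold_c: float = 26.0, window: int = 3):
        self.threshold_c = threshold_c
        self.history = deque(maxlen=window)  # keeps only the latest readings
        self.fan_on = False

    def sense(self, temperature_c: float) -> None:
        # SENSE: collect a reading from the surroundings.
        self.history.append(temperature_c)

    def think(self) -> bool:
        # THINK: look for a pattern over recent readings (a moving average)
        # instead of reacting to a single value; wait until the window is full.
        window_full = len(self.history) == self.history.maxlen
        return window_full and mean(self.history) > self.threshold_c

    def act(self) -> None:
        # ACT: switch the fan on or off with no human intervention.
        self.fan_on = self.think()

    def step(self, temperature_c: float) -> bool:
        """Run one full sense-think-act cycle and return the fan state."""
        self.sense(temperature_c)
        self.act()
        return self.fan_on


fan = SmartFan()
results = [fan.step(t) for t in [20, 20, 30, 20, 27, 28, 29]]
print(results)
```

With these readings the fan stays off through the single spike at 30 and only switches on at the last reading, when the average of the three most recent values (28) exceeds the threshold.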

Technical Information

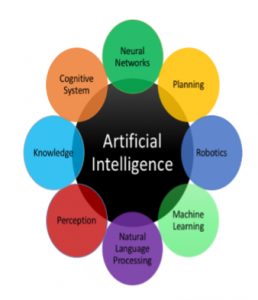

Mathematician Alan Turing’s paper “Computing Machinery and Intelligence” (1950) established the goal and the fundamentals of artificial intelligence, opening with the question “Can machines think?”. AI is a branch of computer science that, at its core, tries to replicate human intelligence in machines. In simpler terms, AI is a combination of algorithms aimed at achieving human-like intelligence, and machine learning is one family of such algorithms.

Artificial intelligence falls under two basic categories:

- Narrow AI

Also referred to as weak AI, narrow AI simulates one aspect of human intelligence and focuses on performing a single task very well, sometimes better than a human can. There have been numerous advances in narrow AI, and it has become a vital contributor to national economies. Examples include self-driving cars and voice search.

- Artificial general intelligence

This is what is referred to as strong AI, the kind seen in science-fiction films about robots. An AGI would be a machine with the same capabilities and intellectual power as a human being. If research continues at its current pace, we may eventually see machines that can be used for any task, possibly even for carrying out AI research themselves.

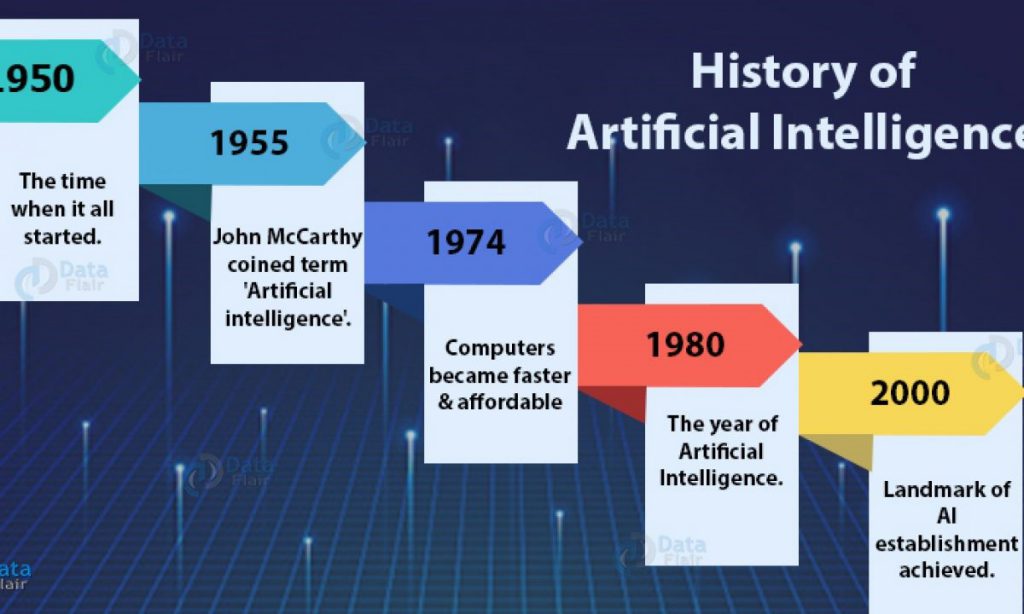

Historical Information

Because Artificial Intelligence shows signs of human-like thinking and allows machines to act like humans, some consider it one of the greatest inventions in the history of science. The field of AI essentially answers three “how” questions:

- How to express knowledge

- How to acquire knowledge

- How to apply knowledge

The term “artificial intelligence” was formally introduced in 1956, at the Dartmouth workshop. Since then, many theories and algorithms have been put forward, and several have been used in practice. AI development can be divided into two main approaches. The first aims to build computational functions that could replace human work; the second looks for intelligent data-exploration systems and applies them in fields such as robotics.

Up to 2019, the field of AI grew significantly. For instance, in 1966 Joseph Weizenbaum’s ELIZA program was able to hold simple conversations with people in natural language, and in 1972 WABOT-1, the first full-scale human-like robot, was successfully developed at Waseda University. Growth was not always steady, however: during periods of reduced funding, often called “AI winters”, research on AI slowed dramatically.

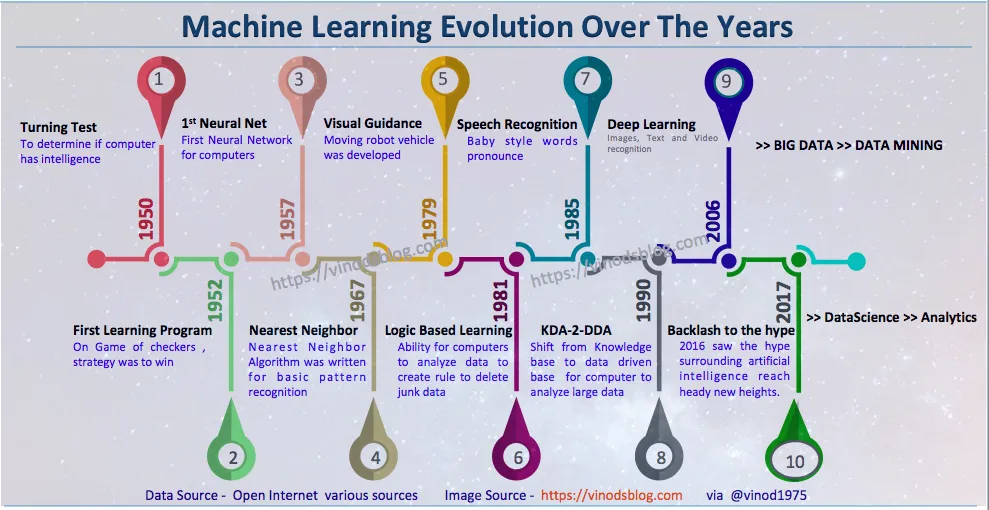

Since 1943, when the first mathematical model of a neuron was proposed, the field of Machine Learning has grown rapidly and changed considerably, eventually producing models such as the restricted Boltzmann machine (RBM). An early milestone was Donald Hebb’s 1949 book The Organization of Behavior, which related the behaviour of neural networks to brain activity. Today, ML is about analysing and learning from input data in order to build models. In 2014, Facebook trained a nine-layer deep neural network (DeepFace) on about 4 million images of its users’ faces. Google also developed Sibyl, a large-scale machine learning system used for predictions such as advertisements and rankings.

Impact of Technology

Technology is developing at a rapid rate, and there is still uncertainty about whether IoT, AI and ML will replace human functions and affect people’s jobs. Interestingly, many career portals have reported that companies are budgeting around $600 million to fund potential jobs in IoT, AI and ML. Many newspapers describe the field as ‘Artificial Intelligence – The next digital frontier’, which points to an influx of new jobs and a need to re-skill workers. The field may also help address the shortage of doctors, now that deep learning has proven successful in diagnosing certain diseases.

For example, Automated Teller Machines (ATMs) have taken over the traditional role of the cashier for standard transactions. Technology will certainly have some negative effects on society. But when we consider examples such as Google’s AI technology, which could help protect the eyesight of millions of people, it is clear that technology can richly reward society by providing solutions and making everyday life much easier.

Future Advancements or Applications

Most AI research has been carried out to meet business needs, although some has been performed for academic purposes. AI has benefited greatly from increases in computing power, as described by Moore’s Law: the number of transistors on a chip roughly doubles every two years. This directly benefits AI, which depends on high computing power. Advances in algorithms have brought speech and image recognition to human-level or even super-human performance on some benchmarks, with error rates falling from about 28% to only about 4%. Systems from companies such as Google and Microsoft have outperformed most earlier AI systems. Another important application of AI is replacing the barcode.
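The fall in error rates quoted above is easier to appreciate as arithmetic. The short Python snippet below simply works through the two figures given in the text (28% and 4%); it does not add any new data.

```python
# Error rates quoted in the text, expressed as fractions.
old_error = 0.28
new_error = 0.04

# Share of the original errors that has been eliminated.
relative_reduction = (old_error - new_error) / old_error
# How many times less frequent errors have become.
improvement_factor = old_error / new_error

print(f"Relative reduction: {relative_reduction:.1%}")  # 85.7%
print(f"Mistakes per 100 items: {old_error * 100:.0f} -> {new_error * 100:.0f}")  # 28 -> 4
print(f"Errors are {improvement_factor:.0f}x less frequent")  # 7x
```

In other words, going from 28 to 4 mistakes per 100 items removes about 86% of the errors, making them roughly seven times less frequent.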

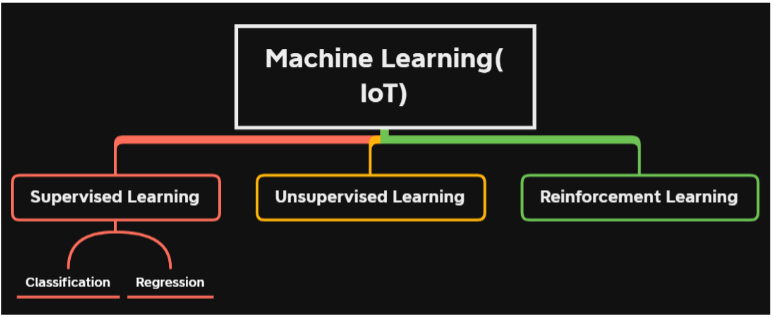

Furthermore, new machine learning algorithms are another important contributor to AI. The Internet of Things generates a huge amount of data, and it is almost impossible for a human to analyze it without errors. A machine learning program is therefore needed that combines human-like pattern recognition with the computational power of a computer. This combination enables AI and the Internet of Things together to analyze enormous amounts of data. Today’s world is a world of big data, and data has become the new gold.

Today, barcodes are essential in most retail stores: they let the till register each item quickly. However, some items, such as fruits and vegetables, do not carry a barcode and must be put in a plastic bag and entered manually. With AI, manual entry and barcode scanning could be replaced by intelligent machines that use voice and image recognition to identify the product correctly and then process payment by cash or card. In this application, a camera acts as the sensor that captures an image of the product, and the AI system processes that image, cross-references it with its stored data, and identifies the item.
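The “cross-reference with stored data” step can be sketched as a nearest-neighbour lookup. This minimal Python example assumes that an image model has already reduced the camera picture to a few numbers; the catalogue entries and their features (average colour and diameter) are hypothetical. A production system would compare learned image embeddings against a much larger catalogue.

```python
import math

# Hypothetical catalogue: each product is described by a small feature vector
# (mean red, green, blue on a 0-1 scale, and approximate diameter in cm)
# that an image-recognition model might extract from a camera frame.
CATALOGUE = {
    "banana": (0.90, 0.80, 0.20, 4.0),
    "apple": (0.80, 0.10, 0.10, 8.0),
    "lime": (0.30, 0.80, 0.20, 5.0),
}


def identify(features, catalogue=CATALOGUE):
    """Return the catalogue item whose stored features are closest (Euclidean distance)."""
    return min(catalogue, key=lambda name: math.dist(features, catalogue[name]))


# Features a camera might report for a green, lime-sized object.
print(identify((0.35, 0.75, 0.25, 5.2)))  # lime
```

Note that the diameter, measured in centimetres, dominates the distance here; in practice the features would be scaled to comparable ranges before they are compared.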

An enormous amount of data is generated every day, including social media posts, images and videos captured by sensors, texts uploaded as books and posts, and many other formats such as CSV files. AI grounds this data and converts it into a uniform format so that it becomes comparable and can be easily analyzed. From this Internet of Things data, useful trends can be extracted, such as customer sentiment about a particular product like a mobile phone. Mobile phone manufacturers can use these trends to understand their customers’ behaviour. If they understand it, they can develop the products customers want and increase their revenues by meeting customer demand effectively.
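Extracting sentiment about a product from posts can be sketched with a very small lexicon-based approach, which counts positive and negative words. This Python example is illustrative only: the word lists and posts are invented, and real systems typically use trained language models rather than hand-picked words.

```python
import re
from collections import Counter

# Tiny hand-made word lists (assumptions for illustration only).
POSITIVE = {"love", "great", "fast", "excellent", "good"}
NEGATIVE = {"hate", "slow", "bad", "broken", "poor"}


def sentiment(post: str) -> str:
    """Label a post positive, negative or neutral by counting lexicon hits."""
    words = re.findall(r"[a-z']+", post.lower())
    score = sum(w in POSITIVE for w in words) - sum(w in NEGATIVE for w in words)
    if score > 0:
        return "positive"
    if score < 0:
        return "negative"
    return "neutral"


# Invented posts about a mobile phone.
posts = [
    "I love the camera on this phone, great photos!",
    "Battery is poor and charging is slow.",
    "Picked up the new phone today.",
    "Screen is excellent but the speaker is bad.",
]

# Aggregate individual labels into an overall trend.
summary = Counter(sentiment(p) for p in posts)
print(summary)
```

For the four sample posts this yields one positive, one negative and two neutral posts (the last post mixes praise and criticism, so its score cancels out). A manufacturer would run the same kind of aggregation over thousands of posts to track opinion over time.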
